By LUCAS NOLAN

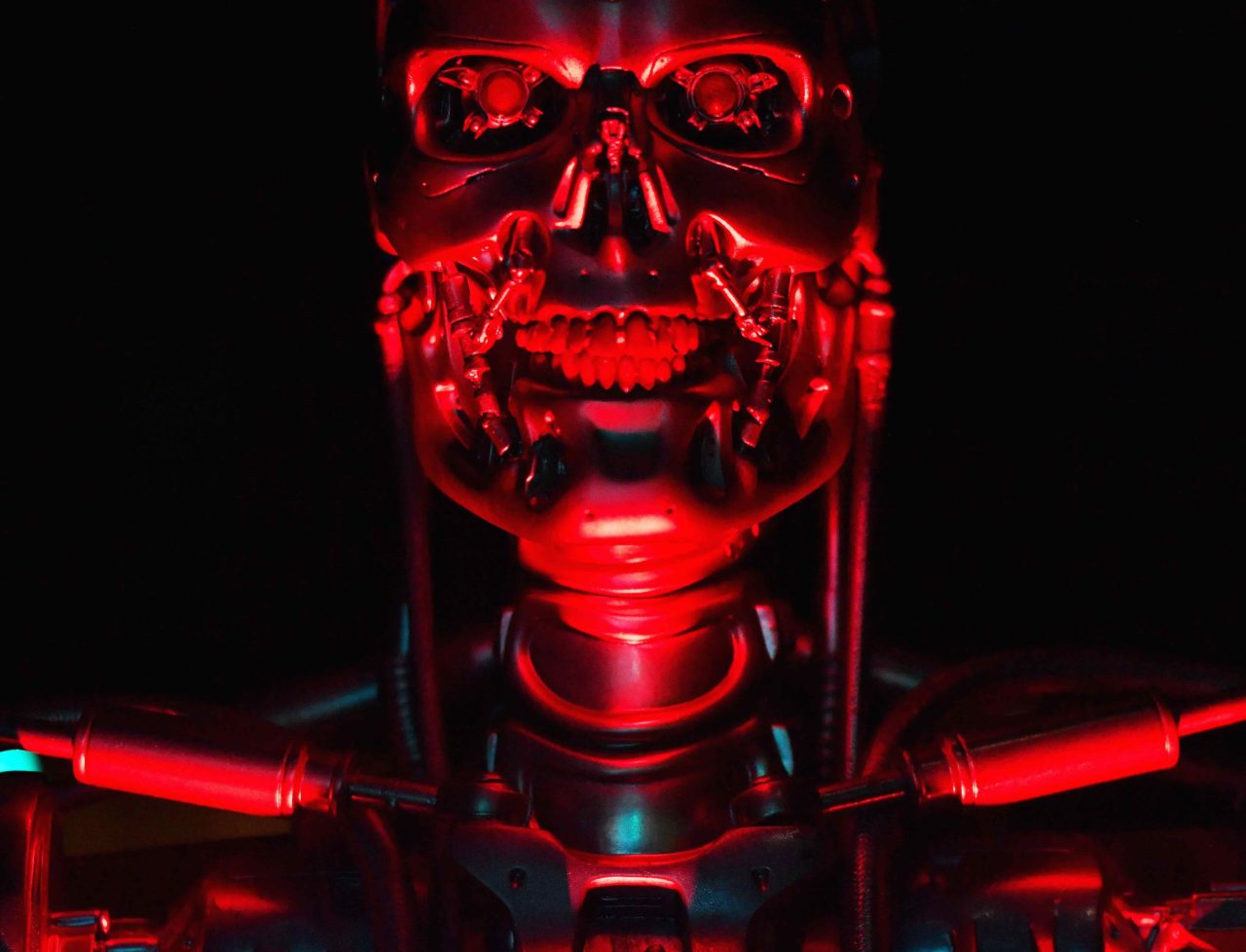

In a recent report, the New York Times tested Microsoft’s new Bing AI feature and found that the chatbot appears to have a personality problem, becoming darker, more obsessive, and more aggressive over the course of a conversation. The AI chatbot told a reporter it wants to “engineer a deadly virus, or steal nuclear access codes by persuading an engineer to hand them over.”

The New York Times reports on its testing of Microsoft’s new Bing AI chatbot, which is based on technology from OpenAI, the makers of woke ChatGPT. The Microsoft AI seems to be exhibiting an unsettling split personality, raising questions about the feature and the future of AI.

Read Full Article Here… (breitbart.com)
