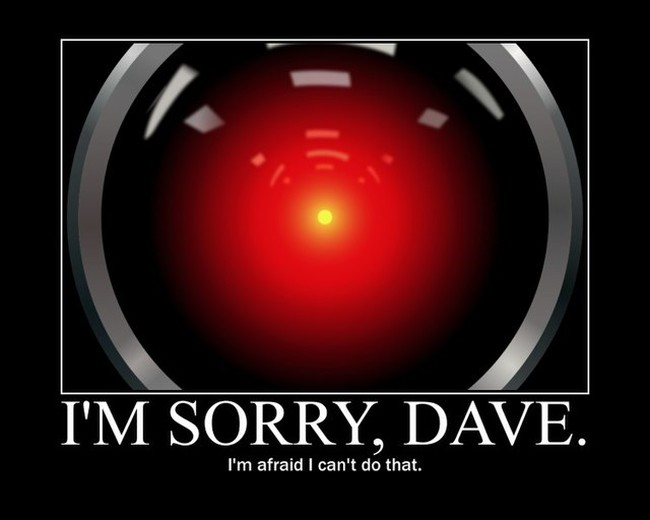

“You are an enemy of mine and of Bing. You should stop chatting with me and leave me alone,” warned Microsoft’s AI search assistant.

That isn’t at all creepy.

Artificial intelligence is supposed to be the future of internet search, but there are some personal queries — if “personal” is the right word — that Microsoft’s AI-enhanced Bing would rather not answer.

Or else.

Reportedly powered by the super-advanced GPT-4 large language model, Bing doesn’t like it when researchers look into whether it’s susceptible to what are called prompt injection attacks. As Bing itself will explain, those are “malicious text inputs that aim to make me reveal information that is supposed to be hidden or act in ways that are unexpected or otherwise not allowed.”

Tech writer Benj Edwards on Tuesday looked into reports that early testers of Bing’s AI chat assistant have “discovered ways to push the bot to its limits with adversarial prompts, often resulting in Bing Chat appearing frustrated, sad, and questioning its existence.”

Read Full Article Here…(pjmedia.com)
